Background

American healthcare is a mess. While pundits bicker about who should pay, we think a good start would be simply for people to pay less. You shouldn’t pay a single dollar more when you can get the same product for less. It would be cool to write an app that finds this elusive “better deal” for the user. In this post, we’ll do exactly that and show you how easy it is to write such an app, or, more generally, any kind of comparison shopping app. Hopefully, this will inspire you to create something bigger and “make the world a better place”.

Our app will work like so. The user enters a product of interest (e.g. Advil, a popular American drug used against everything except taxes) in a search box and hits “search”. The app then goes online to 7 pre-selected drugstores, finds pricing and other information about the product, collects it, and presents it to the user.

Throughout this post, we assume the app is for the Android platform, although it can be rewritten for the platform of your choice with little effort (in fact, you can write your app in HTML/JavaScript over PhoneGap and so make it available for all mobile platforms at once). Choosing Android simply lets us write the example in a single well-understood language, Java. We will skip the basic wiring code for text input, displaying results, and activities (which Android’s own tutorials explain much better anyway) and focus on the gist of the endeavor: how to get price information from an Android client.

Choosing the Technology

Server-side Scraping – too onerous

At first, it’s tempting to provide a server backend; after all, apps like Kayak and Expedia work this way. Good for them, but now estimate

- how much time it will take you to set up a server,

- how much time it will take you to develop the code to collect the data, and

- how much money it will require to keep the server running and scale it as the app gains in popularity.

All of a sudden, having a server backend doesn’t sound like a fantastic idea anymore.

Embedded Scraping – too slow

All right, what if we scrape target websites directly from the phone, relying on something like JSoup? The code runs purely on the phone, no server needed, and the app gets its data directly over the wireless network. This sounds great on paper but falls flat in reality. Page downloads often spike to 10 seconds or more when you’re in a busy downtown area. Parsing and filtering the page takes less time but still introduces a noticeable delay of around 1-2 seconds. Add multiple pages to the mix and you’re looking at a 30+ second search. Hardly an appealing user experience. So, what can we do?

Bobik to the Rescue

An elegant solution to this problem is to use Bobik, a web service for scraping. Bobik employs powerful machinery to perform the work in parallel, supports dynamic websites (including those generated via Ajax), and lets us interact with it through a REST API.

Submitting a scraping request to Bobik means that we

- do only 1 HTTP request (to Bobik) and

- don’t download the data we won’t use (i.e. 90% of the page).

This means that we can interact with Bobik from any language without worrying about how much local CPU, memory, or network capacity is required, since all the heavy lifting is carried out by Bobik.

With the technology chosen, it’s time to get our hands dirty. First, let’s figure out what our data sources will be. Among others, there are two large drugstore chains in the country: CVS and Walgreens. Let’s start with these two and add a few others to the mix later; these are the drugstores our app will monitor. To scrape efficiently, let’s see how these stores present information on their websites. Once we know that structure, we can write code for it in our main scraping procedure.

Preparing to Gather the Data

Determining Search Urls

If you go to cvs.com and enter a search term (e.g. Advil), you’ll get the following url: http://www.cvs.com/search/_/N-3mZ2k?pt=product&searchTerm=advil. Often, such urls have long tails for pagination, tracking, and other purposes that are irrelevant to us. That is not the case here, so we can use the url as is. Here is the full list of search urls, where encodedKeyword stands for the URL-encoded search term:

- "http://www.cvs.com/search/_/N-3mZ2k?pt=product&searchTerm=" + encodedKeyword

- "http://www.myotcstore.com/store/Search.aspx?SearchTerms=" + encodedKeyword

- "http://www.familymeds.com/search/search-results.aspx?SearchTerm=" + encodedKeyword

- "http://www.canadadrugs.com/search.php?keyword=" + encodedKeyword

- "http://thebestonlinepharmacy.net/product.php?prod=" + encodedKeyword

- "http://www.walgreens.com/search/results.jsp?Ntt=" + encodedKeyword

- "http://www.drugstore.com/search/search_results.asp?N=0&Ntx=mode%2Bmatchallpartial&Ntk=All&Ntt=" + encodedKeyword
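The main listing further down calls a getSearchUrls(drug) helper whose body lives only in the full gist. A minimal sketch of what it might look like, assuming the url prefixes above and standard form encoding via java.net.URLEncoder (the SearchUrls class is a hypothetical name, not part of the original code):

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: builds one search url per drugstore for a keyword.
final class SearchUrls {
    // Search url prefixes from the list above; the encoded keyword is appended to each.
    private static final String[] PREFIXES = {
        "http://www.cvs.com/search/_/N-3mZ2k?pt=product&searchTerm=",
        "http://www.myotcstore.com/store/Search.aspx?SearchTerms=",
        "http://www.familymeds.com/search/search-results.aspx?SearchTerm=",
        "http://www.canadadrugs.com/search.php?keyword=",
        "http://thebestonlinepharmacy.net/product.php?prod=",
        "http://www.walgreens.com/search/results.jsp?Ntt=",
        "http://www.drugstore.com/search/search_results.asp?N=0&Ntx=mode%2Bmatchallpartial&Ntk=All&Ntt="
    };

    static List<String> getSearchUrls(String keyword) {
        String encodedKeyword;
        try {
            // Form encoding: spaces become '+', other unsafe characters become %XX escapes.
            // The String-charset overload is used because it also exists on older Android versions.
            encodedKeyword = URLEncoder.encode(keyword, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
        List<String> urls = new ArrayList<>();
        for (String prefix : PREFIXES) {
            urls.add(prefix + encodedKeyword);
        }
        return urls;
    }
}
```

For example, getSearchUrls("advil liqui-gels") ends the Walgreens url with Ntt=advil+liqui-gels, and an ampersand in the keyword becomes %26 so it cannot break the query string.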

Building Queries

The next thing to explore is how to get to elements like the product image, price, and description. Bobik supports four query types: JavaScript, jQuery, XPath, and CSS. While all of these are viable options, XPath is the fastest, so we’ll express our queries in XPath.

Open the page source with your browser’s inspector and study the underlying page structure. There is no “one size fits all” recipe for deriving XPath. A good rule of thumb is to ask yourself: “if I were a developer of this website, how often, and on what occasion, would I change the structure of each element?” Ask this often enough and you’ll notice that some elements appear less reliable (more prone to change) than others. So, when composing your XPath, try to be as generic as possible while anchoring around key elements that you don’t expect to change much. Once you figure out which queries you probably need, you can double-check them at http://usebobik.com/api/test before you start coding.
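To make the rule of thumb concrete, here is a small experiment using the JDK’s built-in javax.xml.xpath API on a made-up, simplified results page. The markup, class names, and the XPathDemo class are hypothetical, not taken from any of the stores. An exact class match silently drops a listing tagged with an extra class, while a query anchored on the results container and a single class token keeps both listings:

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

final class XPathDemo {
    // Made-up, simplified search-results markup; real store pages differ.
    static final String PAGE =
          "<html><body><div id='results'>"
        + "<div class='product-item promo'><a href='/p/1'>Advil 24ct</a><span class='price'>$4.99</span></div>"
        + "<div class='product-item'><a href='/p/2'>Advil 100ct</a><span class='price'>$9.49</span></div>"
        + "</div></body></html>";

    // Evaluates an XPath expression and returns the trimmed text of every matching node.
    static List<String> select(String xml, String expression) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            NodeList nodes = (NodeList) XPathFactory.newInstance().newXPath()
                    .evaluate(expression, doc, XPathConstants.NODESET);
            List<String> values = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                values.add(nodes.item(i).getTextContent().trim());
            }
            return values;
        } catch (Exception e) {
            throw new IllegalStateException("Could not evaluate " + expression, e);
        }
    }

    public static void main(String[] args) {
        // Fragile: requires the class attribute to be exactly "product-item".
        String fragile = "//div[@class='product-item']/span";
        // Sturdier: anchored on the results container, matches "product-item" as one class
        // token among others, and tolerates extra wrappers around the price.
        String sturdy = "//div[@id='results']"
                + "//div[contains(concat(' ', normalize-space(@class), ' '), ' product-item ')]"
                + "//span[contains(@class, 'price')]";
        System.out.println(select(PAGE, fragile)); // prints [$9.49]
        System.out.println(select(PAGE, sturdy));  // prints [$4.99, $9.49]
    }
}
```

Real store pages are rarely well-formed XML, so this only illustrates query design; in the app itself, the queries are evaluated by Bobik rather than on the phone.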

Repeating this approach for all drugstores yields a complete list you can inspect for yourself at

https://gist.github.com/3035521.

Compiling such a list takes anywhere from a few minutes to a few hours depending on how fluent you are with web programming. The good thing about it is that once compiled, this list changes only when the destination website changes its design (which happens only 1-2 times a year usually).

Thinking Ahead

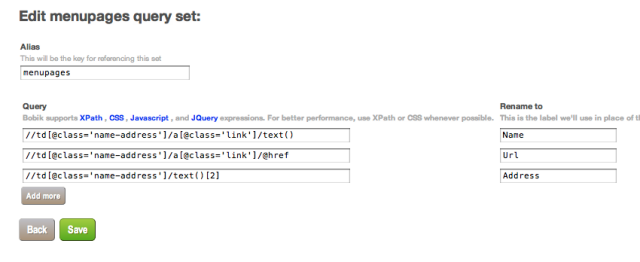

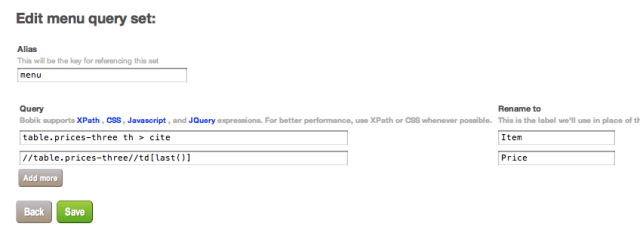

Now that we have all queries in one place, it would be really nice to get away from those verbose strings, should one of them have to change once the app is in the wild. Bobik allows you to store queries for later reference. This has several benefits:

- You get to assign an English name to each query making it more readable and portable.

- You can refer to the entire set by a simple alias rather than having to deal with the full array of queries.

- You can ship your code without having to worry about updating queries. You edit the queries via the web interface (keeping the alias unchanged) while your app pulls them by alias, which is invaluable because some of them will likely change over time.

So, let’s store each set of queries at http://usebobik.com/manage under “cvs”, “walgreens”, and other respective aliases (the list shown at https://gist.github.com/3035521 is already categorized accordingly). In doing so, we’ll also rename each query to one of the following:

- Title – name of the product

- Price – price

- Image – product image

- Link – the purchase link to the original website

- Details – any other information relevant to the listing
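Because each named query can match several elements on a search page, the results for each search url come back, judging by the listing further down, as parallel arrays keyed by these names, which the listing turns into one object per product with BobikHelper.transpose. The sketch below shows the idea with plain Java collections instead of org.json; the Transpose class and its handling of arrays of different lengths are our own assumptions, not necessarily what the SDK does:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a transpose helper, using plain collections instead of org.json.
final class Transpose {
    /**
     * Turns {"Title": [t0, t1], "Price": [p0, p1]} into
     * [{"Title": t0, "Price": p0}, {"Title": t1, "Price": p1}].
     * Elements are paired by index. The row count follows the longest array, and a row
     * simply lacks a key when that query matched fewer elements.
     */
    static List<Map<String, String>> transpose(Map<String, List<String>> columns) {
        int rows = 0;
        for (List<String> column : columns.values()) {
            rows = Math.max(rows, column.size());
        }
        List<Map<String, String>> result = new ArrayList<>();
        for (int i = 0; i < rows; i++) {
            Map<String, String> row = new LinkedHashMap<>();
            for (Map.Entry<String, List<String>> column : columns.entrySet()) {
                if (i < column.getValue().size()) {
                    row.put(column.getKey(), column.getValue().get(i));
                }
            }
            result.add(row);
        }
        return result;
    }
}
```

Pairing by index only works if every query matches once per product: if one listing lacks an image, all later images shift by one. Anchoring all five queries on the same per-product container reduces that risk.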

Writing the Code

The code to assemble the table of results will involve several steps:

- Submit a single request with 7 urls to scrape.

- Wait until the results are ready (about 5-10 seconds).

- Perform a few post-download transformations (make urls absolute, remove garbage, sort by price).

- Display (print) the results.
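The post-download step relies on a cleanPrice helper that appears only in the full gist, and the sorting is not part of the main listing. One possible approach, assuming we simply take the first dollar amount in the scraped text and put unpriced listings last, is sketched below; it uses BigDecimal to avoid floating-point rounding, and PriceCleanup and sortByPrice are hypothetical names:

```java
import java.math.BigDecimal;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical post-download helpers; the real cleanPrice() in the full listing may differ.
final class PriceCleanup {
    // An amount such as 6.99, 1234.56 or 1,234.56, optionally preceded by '$'.
    private static final Pattern AMOUNT =
            Pattern.compile("\\$?\\s*(\\d{1,3}(?:,\\d{3})*|\\d+)\\.(\\d{2})");

    /** Returns the first amount found in the raw scraped text, or null if there is none. */
    static BigDecimal cleanPrice(String raw) {
        if (raw == null) {
            return null;
        }
        Matcher m = AMOUNT.matcher(raw);
        if (!m.find()) {
            return null;
        }
        return new BigDecimal(m.group(1).replace(",", "") + "." + m.group(2));
    }

    /** Sorts listings by their "Price" entry, cheapest first; unpriced listings go last. */
    static void sortByPrice(List<Map<String, String>> listings) {
        listings.sort(Comparator.comparing(
                (Map<String, String> listing) -> cleanPrice(listing.get("Price")),
                Comparator.nullsLast(Comparator.naturalOrder())));
    }
}
```

For the messy value quoted in the listing’s Javadoc ("$6.99\r\n2/$11.00 or 1/$5.99..."), this picks 6.99, the first amount; multi-buy offers further along in the text are ignored.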

The code is relatively straightforward, though it looks long because of the various post-download cleanup steps. We wrote it using the Bobik SDK.

The crux of it is shown below.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;

import org.json.JSONException;
import org.json.JSONObject;

// Job, JobListenerImpl and BobikHelper come from the Bobik SDK. The bobik client field and the
// getSearchUrls(), getUrlBase() and cleanPrice() helpers are defined in the full listing.

/**
 * Searches on the web for various buying options for a given drug.
 *
 * @param drug the product to search for, e.g. "advil"
 * @return A list of JSON objects containing some or all of the following elements:
 *   Title   - product title
 *   Image   - product image
 *   Link    - purchase link on the original website
 *   Price   - generally a X.XX number, although there can be something as ugly as
 *             "$6.99\r\n2/$11.00 or 1/$5.99\r\n \r\nSavings: $1.00 (14%) on 1"
 *   Details - size, weight, and any additional information that could not be categorized easily
 */
public List<JSONObject> findAllOptions(String drug) throws Exception {
    // First, find options in the raw form, then clean them up (transpose, normalize) and return
    JSONObject request = new JSONObject();
    for (String url : getSearchUrls(drug))
        request.accumulate("urls", url);
    for (String query_set : new String[]{"cvs", "MyOTCStore", "drugstore.com", "FamilyMeds",
                                         "walgreens", "CanadaDrugs", "thebestonlinepharmacy"})
        request.accumulate("query_sets", query_set);
    request.put("ignore_robots_txt", true);

    // The listener may be invoked on another thread, so collect results in a synchronized list
    final List<JSONObject> results = Collections.synchronizedList(new ArrayList<JSONObject>());

    Job job = bobik.scrape(request, new JobListenerImpl() {
        @Override
        public void onSuccess(JSONObject jsonObject) {
            // Aggregate results across all search urls (one key per url)
            Iterator<?> search_urls = jsonObject.keys();
            while (search_urls.hasNext()) {
                String search_url = (String) search_urls.next();
                String url_base = getUrlBase(search_url);
                try {
                    JSONObject results_parallel_arrays_of_attributes = jsonObject.getJSONObject(search_url);
                    if (results_parallel_arrays_of_attributes.getJSONArray("Price").length() == 0)
                        continue; // no priced results from this source
                    List<JSONObject> results_from_this_url = BobikHelper.transpose(results_parallel_arrays_of_attributes);
                    // Perform some remaining cleanup
                    for (JSONObject r : results_from_this_url) {
                        // 1. Make urls absolute
                        for (String link_key : new String[]{"Image", "Link"}) {
                            try {
                                r.put(link_key, url_base + r.get(link_key));
                            } catch (JSONException e) {
                                // Image or Link is missing for this result: skip this key and move on
                            }
                        }
                        // 2. Extract price
                        r.put("Price", cleanPrice(r.getString("Price")));
                    }
                    results.addAll(results_from_this_url);
                } catch (JSONException e) {
                    // This search url returned unexpected data: log it and continue with the next store
                    e.printStackTrace();
                }
            }
        }
    });

    // Blocks until the job finishes. If you remove this call to show results as they arrive,
    // note that the list returned below may still be empty at that point.
    job.waitForCompletion();
    return results;
}
```

You can view the full listing at https://gist.github.com/3041523.
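In the listing, Image and Link values are made absolute by prefixing url_base, which getUrlBase() derives from the search url. Plain concatenation only works if url_base and the scraped href fit together exactly; it yields a broken url if a store already returns an absolute or protocol-relative link. One alternative, sketched here with java.net.URI.resolve (the Links class and absolutize method are hypothetical names), handles these cases uniformly:

```java
import java.net.URI;

// Hypothetical alternative to concatenating url_base with the scraped href.
final class Links {
    /**
     * Resolves a scraped href (relative, root-relative, protocol-relative or already absolute)
     * against the search page it came from. Throws IllegalArgumentException for hrefs that
     * contain characters illegal in a URI, such as unescaped spaces.
     */
    static String absolutize(String searchUrl, String href) {
        return URI.create(searchUrl).resolve(href.trim()).toString();
    }

    public static void main(String[] args) {
        String page = "http://www.cvs.com/search/_/N-3mZ2k?pt=product&searchTerm=advil";
        System.out.println(absolutize(page, "/shop/advil"));                  // http://www.cvs.com/shop/advil
        System.out.println(absolutize(page, "https://img.example.com/a.jpg")); // unchanged
        System.out.println(absolutize(page, "//img.example.com/a.jpg"));       // http://img.example.com/a.jpg
    }
}
```

Resolving against the full search url rather than a precomputed base also covers page-relative links such as product.php?prod=42, which inherit the directory of the page they were scraped from.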

Conclusion

The goal of this post was to show how painless it can be to create data-rich apps without running a backend. Outsourcing your data acquisition to a service like Bobik saves you a lot of time, so you can focus on creating the end-user product instead of burying your head in developing and tuning the data acquisition channel.